Forex Trading

Neural Networks: The XOR Problem

Contents:

If the two inputs are identical, the XOR function returns a false value. The following table shows the inputs and outputs of the XOR function. To train our perceptron, we must ensure that we correctly classify all of our train data. Note that this is different from how you would train a neural network, where you wouldn’t try and correctly classify your entire training data. That would lead to something called overfitting in most cases.

It will make network symmetric and thus the neural network looses it’s advantages of being able to map non linearity and behaves much like a linear model. This is an example of a simple 3-input, 1-output neural network. As we talked about, each of the neurons has an input, $i_n$, a connection to the next neuron layer, called a synapse, which carries a weight $w_n$, and an output layer.

Neural networks are complex to code compared to machine learning models. If we compile the whole code of a single-layer perceptron, it will exceed 100 lines. To reduce the efforts and increase the efficiency of code, we will take the help of Keras, an open-source python library built on top of TensorFlow. Designing a neural network in terms of writing code will be very hectic and unreadable to the users. Escaping all the complexities, data professionals use python libraries and frameworks to implement models. But we are designing an elementary neural network, so we will build it without using any framework like TensorFlow and PyTorch.

Parameters Evolution

So you may need to try, check results and then re-start. I suggest you use a seeded random number generator for initialisation, and adjust the seed value if error values get stuck and do not improve. Following the development proposed by Ian Goodfellow et al, let’s use the mean squared error function for the sake of simplicity. There are large regions of the input space which are mapped to an extremely small range. In these regions of the input space, even a large change will produce a small change in the output. Polaris000/BlogCode/xorperceptron.ipynb The sample code from this post can be found here.

Squared Error LossSince, there may be many weights contributing to this error, we take the partial derivative, to find the minimum error, with respect to each weight at a time. The change in weights are different for the output layer weights (W31 & W32) and different for the hidden layer weights . Backpropagation is a way to update the weights and biases of a model starting from the output layer all the way to the beginning. The main principle behind it is that each parameter changes in proportion to how much it affects the network’s output.

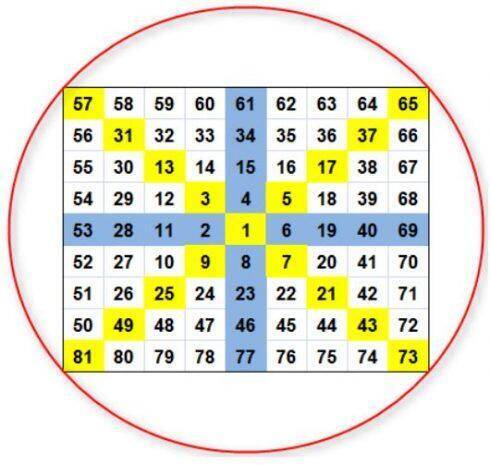

There are several workarounds for this problem which largely fall into architecture (e.g. ReLu) or algorithmic adjustments (e.g. greedy layer training). It is very important in large networks to address exploding parameters as they are a sign of a bug and can easily be missed to give spurious results. It is also sensible to make sure that the parameters and gradients are cnoverging to sensible values. Furthermore, we would expect the gradients to all approach zero. In larger networks the error can jump around quite erractically so often smoothing (e.g. EWMA) is used to see the decline. Two lines is all it would take to separate the True values from the False values in the XOR gate.

More than only one neuron , the return (let’s use a non-linearity)

We have some instance variables like the training data, the target, the number of input nodes and the learning rate. In the image above we see the evolution of the elements of \(W\). Notice also how the first layer kernel values changes, but at the end they go back to approximately one. I believe they do so because the gradient descent is going around a hill (a n-dimensional hill, actually), over the loss function.

Scalable true random number generator using adiabatic … – Nature.com

Scalable true random number generator using adiabatic ….

Posted: Mon, 21 Nov 2022 08:00:00 GMT [source]

XOR classes do not have linear separability due to this. Apart from the usual visualization and numerical libraries , we’ll use cycle from itertools . This is done since our algorithm cycles through our data indefinitely until it manages to correctly classify the entire training data without any mistakes in the middle.

The weight of -2 from the hidden neuron to the output one insures that the output neuron will not come on when both input neurons are on (ref. 2). We will update the parameters using a simple analogy presented below. Lalit Pal is an avid learner and loves to share his learnings. He is a Quality Analyst by profession and have 12 years of experience.

XOR-Gate-With-Neural-Network-Using-Numpy

The most important thing to remember from this example is the points didn’t move the same way . That effect is what we call “non linear” and that’s very important to neural networks. Some paragraphs above I explained why applying linear functions several times would get us nowhere. Visually what’s happening is the matrix multiplications are moving everybody sorta the same way .

Programming and training rate-independent chemical reaction … – pnas.org

Programming and training rate-independent chemical reaction ….

Posted: Thu, 09 Jun 2022 07:00:00 GMT [source]

Further, to assess the applicability and generalization of our proposed single neuron model, we have varied the input dimension and no. of input samples in training the proposed model. We have considered three different cases having 103, 104, and 106 samples in the dataset, respectively. Results show that the loss depends upon the no. of samples in the dataset. The hidden layer performs non-linear transformations of the inputs and helps in learning complex relations.

Not the answer you’re looking for? Browse other questions tagged pythonneural-networkxorkeras or ask your own question.

Hopefully, this post gave you some idea on how to build and train perceptrons and vanilla networks. Its derivate its also implemented through the _delsigmoid function. Adding input nodes — Image by Author using draw.ioFinally, we need an AND gate, which we’ll train just we have been. Here, we cycle through the data indefinitely, keeping track of how many consecutive datapoints we correctly classified. If we manage to classify everything in one stretch, we terminate our algorithm. We know that a datapoint’s evaluation is expressed by the relation wX + b .

This picture should make it more clear, the values on the connections are the weights, the values in the neurons are the biases, the decision functions act as 0/1 decisions . Initialize the value of weight and bias for each layer. To design a hidden layer, we need to define the key constituents again first. This is our final equation when we go into the mathematics of gradient descent and calculate all the terms involved. To understand how we reached this final result, see this blog.

As we can see, the Perceptron predicted the correct output for logical OR. Similarly, we can train our Perceptron to predict for AND and XOR operators. But there is a catch while the Perceptron learns the correct mapping for AND and OR. It fails to map the output for XOR because the data points are in a non-linear arrangement, and hence we need a model which can learn these complexities. Adding a hidden layer will help the Perceptron to learn that non-linearity. This is why the concept of multi-layer Perceptron came in.

The XOR function

There are a few techniques to avoid local minima, such as adding momentum and using dropout. #3 The https://traderoom.info/ problem is a difficult problem, to learn for a neural networ and it is not clear why your particular network struggle to perform efficiently with a topology. A converged result should have hyperplanes that separate the True and False values.

- These weights will need to be adjusted, a process I prefer to call “learning”.

- That said, I’ve got easily good results with or network architectures.

- Based on this comparison, the weights for both the hidden layers and the output layers are changed using backpropagation.

- We have some instance variables like the training data, the target, the number of input nodes and the learning rate.

- Where y_output is now our estimation of the function from the neural network.

We will take the help of NumPy, a xor neural network library famous for its mathematical operations and multidimensional arrays. Then we will switch to Keras for building multi-layer Perceptron. As I said, there are many different kinds of activation functions – tanh, relu, binary step – all of which have their own respective uses and qualities. We now have a neural network (albeit a lousey one!) that can be used to make a prediction.

Backpropagation was discovered for the first time in the 1980s by Geoffrey Hinton. Convolutional Neural Network was founded by entrepreneur Yann LeCun as a collaboration between his network LeNet-5 and CNN. CNN processed the input signal as a feature of visual data by compressing it. In 2006, researchers Hinton and Bengio discovered that neural networks with multiple layers could be trained efficiently by weight initialization. Egrioglu, “Threshold single multiplicative neuron artificial neural networks for nonlinear time series forecasting,” Journal of Applied Statistics, vol.

neural_nets

As a result, we create a Multi Layered Perceptron to solve this issue. To construct a perceptron, we must first comprehend that the XOr gate can be written in combination with AND gates, NOT gates and OR gates. We built a multilayered perceptron with the following weights and generated the XOr logical operator’s output using it.

The πt-neuron model has shown the appropriate research direction for solving the logical XOR and N-bit parity problems . The reported success ratio is ‘1’ for two-bit to six-bit inputs in . However, in the case of seven-bit input, the reported success ratio is ‘0.6’ only. Success ratio has been calculated by considering averaged values over ten simulations .

Also, for successful training in the case of seven-bit, it requires adjusting the trainable parameter (scaling factor bπ‒t) . This is also indicating the training issue in the case of higher dimensional inputs. Moreover, Iyoda et al. have suggested increasing the range of initialization for scaling factors in case of a seven-bit parity problem .

However, these are much simpler, in both design and in function, and nowhere near as powerful as the real kind. I mean when you design a neural network is it possible to understand what should be the number of neurons in hidden layer? Or you assume a large value, and then reduce to see the impact. BTW, when i implemented the XOR gate without one hot true outputs,, it could learn with 2 input, 2 hidden, 1 output.

These hidden layers help in learning the complex patterns in our data points. A linearly separable data set is one that can be separated into lines, planes, and points in 1D, 2D, and 3D. Perceptrons can only be combined when linear data converges to form a perceptron. As a result, it is incapable of performing XOR functions. The XOR problem had to be solved using a hidden layer of perceptrons. However, due to the limitations of modern computing, this was rarely possible.

Perceptrons are used to divide n-dimensional spaces into two regions known as True or False. A 1 is fired by a neuron if it is voltage-washed (e.g., there is enough voltage to fire but no action is taken). As part of backpropagation, we backfeed our errors from the final output neuron into the weights, which are then adjusted.

It is obvious here that the classes in two-dimensional XOR data distribution are the areas formed by two of the axes ‘X1’ and ‘X2’ . Furthermore, these areas represent respective classes simply by their sign (i.e., negative area corresponds to class 1, positive area corresponds to class 2). In this blog, we will first design a single-layer perceptron model for learning logical AND and OR gates. Then we will design a multi-layer perceptron for learning the XOR gate’s properties. XOR is a classical problem in the artificial neural network .

For learning to happen, we need to train our model with sample input/output pairs, such learning is called supervised learning. Supervised learning approach has given amazing result in deep learning when applied to diverse tasks like face recognition, object identification, NLP tasks. Most of the practically applied deep learning models in tasks such as robotics, automotive etc are based on supervised learning approach only. Other approaches are unsupervised learning and reinforcement learning.